Color image processing technology

The strength of KURABO resides in its image processing system for distinguishing color differences, as well as its data processing technology which does not depend on a driver.

KURABO utilizes image processing technology that matches the color identification results from mechanical systems and from the human eye. Having developed a high-level data processing system for smoothly handling large capacity images, KURABO contributes to society with various kinds of inspection equipment and printing systems.

KURABO’s unique technology: Polar Coordinate Extraction Method

KURABO’s unique color extraction method, the Polar Coordinate Extraction Method, features strong resistance against changes in light quantity.

The human eye adapts to changes in ambient brightness when recognizing the color of an object, so humans can determine the color without being affected by the amount of surrounding light. This ability is closely related to the perceptual color coordinate system. Colors can be represented by various physical color coordinate systems (RGB values, XYZ tristimulus values, etc.) and perceptual color coordinate systems (hue, brightness, saturation, etc.). The perceptual color coordinate system has four parameters: 1) bright - dark (brightness), 2) vivid - dull (saturation), 3) color tone (hue), and 4) dark - light (how deep or pale the color is).

KURABO created its unique Polar Coordinate Extraction Method for color extraction by making effective use of the two parameters that are not affected by light quantity: color tone (hue) and dark - light. These two parameters stay constant when the light quantity changes.

■ Polar Coordinate Extraction Method (scheme)

■ Polar Coordinate Extraction image (Example)

KURABO’s data processing technology easily handles large capacity images.

To print an image, a RIP (Raster Image Processor) system is needed to convert print data described with commands into dot data. In some cases, limitations of the printer driver prevent printing. KURABO’s Aupier-copy, however, can print large format images that ordinary printer drivers cannot handle, because it operates the printer directly without going through the printer driver.

Tips: Color extraction (image processing) and large format image processing

Tips1.

What is color extraction?

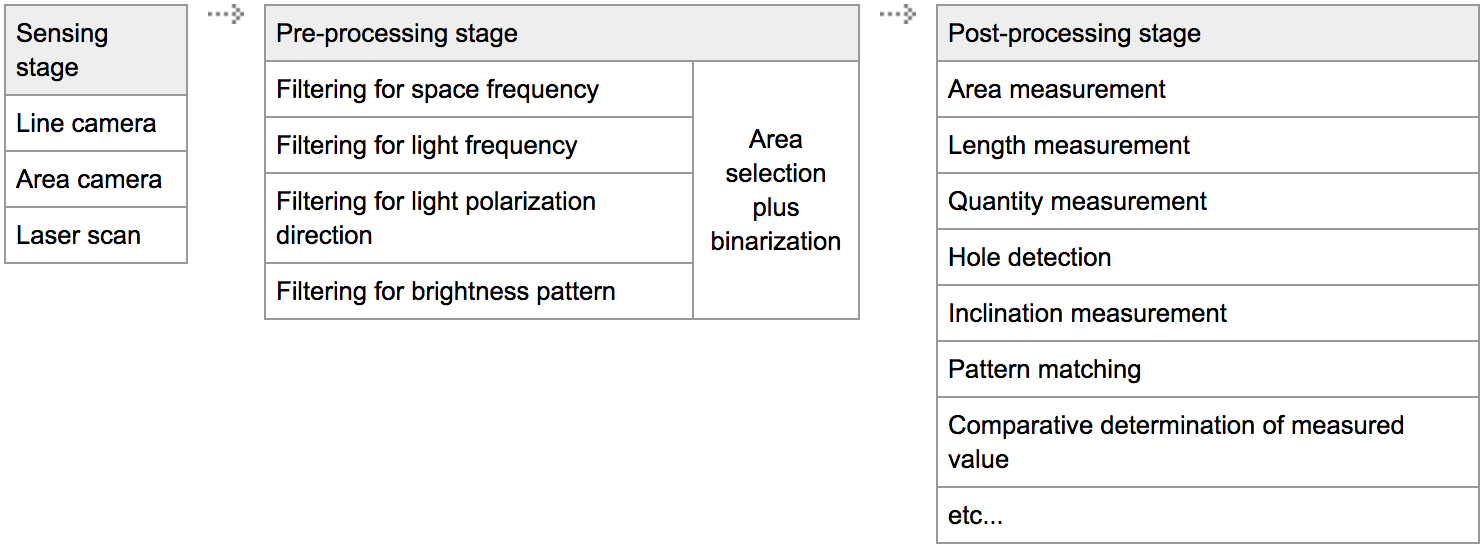

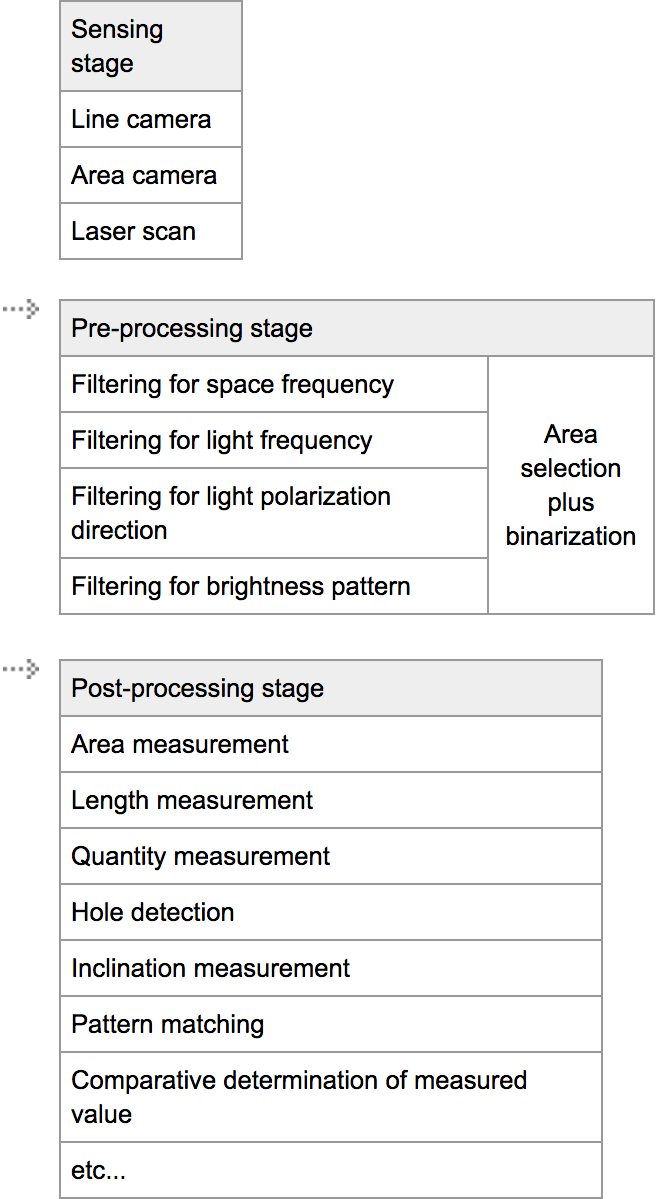

The flow of general image processing used in factory automation (FA) is roughly classified into:

the sensing stage, in which an image is captured;

the pre-processing stage, in which the feature quantity is extracted; and

the post-processing stage, in which a measurement is made to determine the result.

In the pre-processing stage, it is best to output only the minimum amount of information needed, so that measurement and accept/reject decisions in the post-processing stage are easier.

Fig.1 : Flow of general image processing

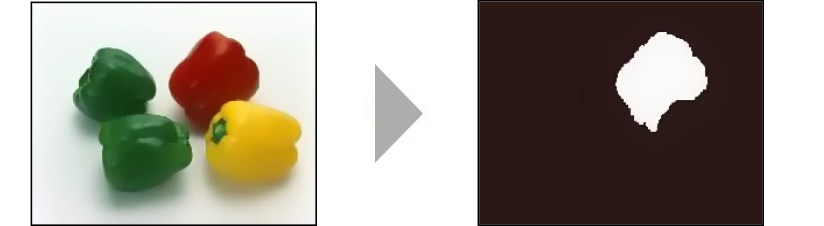

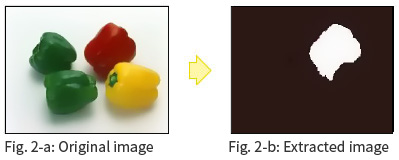

Color extraction is one of the pre-processing techniques used for color image processing. In this process, specific colors are separated from the other colors of the color image (original image) obtained from a color camera. As shown in Fig. 2-a, for example, when the yellow area is extracted from the original image of a 3-color green pepper imaged with a color camera, the resulting image (extracted image) is obtained as shown in Fig. 2-b.

As a result, the original image has an information content of 256 tones (8 bits) x 3 colors (RGB) x number of pixels, while the extracted image has an information content of 2 tones (1-bit monochrome) x number of pixels. This is one 24th of the original information content, which puts less load on the post-processing stage.
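The arithmetic behind the one-24th figure can be checked with a short sketch. The 640 x 480 resolution and the helper name `image_bits` are assumptions chosen only for illustration; the ratio does not depend on the resolution.

```python
def image_bits(width, height, bits_per_channel, channels):
    """Return the raw (uncompressed) size of an image in bits."""
    return width * height * bits_per_channel * channels


# Hypothetical frame size, assumed for illustration only.
width, height = 640, 480

# Original image: 8 bits (256 tones) for each of the R, G, and B channels.
original_bits = image_bits(width, height, bits_per_channel=8, channels=3)

# Extracted image: 1 bit (2 tones) per pixel, single channel.
extracted_bits = image_bits(width, height, bits_per_channel=1, channels=1)

ratio = original_bits // extracted_bits
print(original_bits, extracted_bits, ratio)
```

The ratio is 24 for any image size, because the pixel count cancels out and only the 24 bits per pixel versus 1 bit per pixel remain.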

When inspections are carried out with actual FA usage, however, a number of elements exist that can disturb the results, including fluctuations of the line, unevenness of illumination, and surface irregularity of the object itself. If the pre-processing method is not appropriate, these elements will lead to unstable inspection results. Therefore, the pre-processing method used for image processing is critical.

Tips2.

General color extraction methods

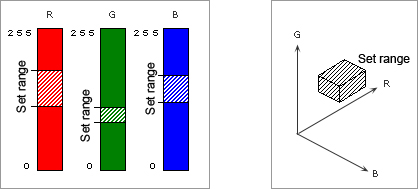

RGB method

When color image processing systems for FA use started to be commercialized, the RGB method was widely employed. With the RGB method, a range is set for each of the RGB signals and the colors falling in that range are extracted (see Fig. 3). For this method, the same processing used for monochrome is simply applied to images of R (red), G (green), and B (blue). Therefore, the hardware can be very simply configured, but it is directly affected by changes in illumination brightness, etc., as with monochrome image processing.
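A minimal sketch of range-based RGB extraction is shown here. The function name `extract_rgb`, the sample pixels, and the threshold values are all assumptions made for illustration, not values from any actual product.

```python
def extract_rgb(pixels, lower, upper):
    """Return a binary mask: 1 where every channel lies within [lower, upper]."""
    return [
        [
            1 if all(lo <= c <= hi for c, lo, hi in zip(px, lower, upper)) else 0
            for px in row
        ]
        for row in pixels
    ]


# Hypothetical 2 x 2 image of (R, G, B) pixels.
image = [
    [(250, 220, 30), (40, 160, 50)],   # bright yellow, green
    [(125, 110, 15), (200, 30, 30)],   # same yellow at half brightness, red
]

# Assumed range for "yellow", set while looking at the brightly lit pixel.
yellow_lower = (200, 180, 0)
yellow_upper = (255, 255, 80)

mask = extract_rgb(image, yellow_lower, yellow_upper)
print(mask)
```

In this sketch the brightly lit yellow pixel falls inside the range, but the same yellow at half brightness falls outside it, which illustrates how the RGB method is directly affected by illumination changes.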

Fig.3 : RGB method

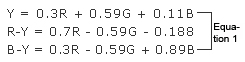

Image color-difference signal method

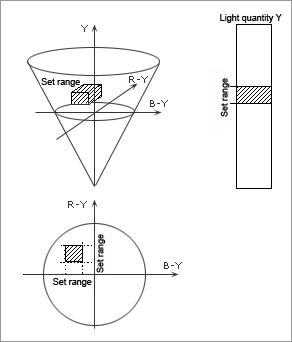

Subsequently, the image color-difference signal method came onto the market. In this method, a color range is specified for the image color-difference signals. More specifically, based on the vectorscope display of the image signals, upper and lower limits are set for the brightness signal Y and the color-difference signals R-Y and B-Y in equation 1, and then the colors falling in the set range are extracted (see Fig. 4).

This method allows thresholds to be specified for color components independent of brightness Y, which is a light-quantity component. It has the advantage of being less affected by changes in light quantity than the above RGB method.

However, the relationship between color differences as sensed by humans and distances (i.e., color differences) expressed in this color space is not linear. Therefore, colors that humans judge to be clearly different may be processed as indistinguishable.
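The thresholding on Y, R-Y, and B-Y described above can be sketched as follows. The luma weights used here are the common ITU-R BT.601 values and are an assumption, since the page's own equation is not reproduced in this text. The function names and all limit values are hypothetical.

```python
def color_difference(r, g, b):
    """Return (Y, R-Y, B-Y). The BT.601 luma weights are an assumption."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, r - y, b - y


def in_range(value, limits):
    """True when value lies within the inclusive (low, high) limits."""
    low, high = limits
    return low <= value <= high


def extract(pixel, y_limits, ry_limits, by_limits):
    """True when Y, R-Y, and B-Y all fall inside their limits."""
    y, ry, by = color_difference(*pixel)
    return (in_range(y, y_limits)
            and in_range(ry, ry_limits)
            and in_range(by, by_limits))


# Assumed limits for "yellow": a wide Y range, narrower color-difference ranges.
limits = dict(y_limits=(50, 255), ry_limits=(10, 60), by_limits=(-200, -60))

for name, pixel in [("bright yellow", (250, 220, 30)),
                    ("dim yellow", (125, 110, 15)),
                    ("red", (200, 30, 30)),
                    ("green", (40, 160, 50))]:
    print(name, extract(pixel, **limits))
```

Because the brightness limit can be kept wide while the color-difference limits stay narrow, both yellow pixels are extracted in this sketch. Note that R-Y and B-Y still scale with the light quantity, so the tolerance to brightness changes is limited.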

Fig.4 : Image color-difference signal method

Tips3.

Polar Coordinate Extraction Method

When humans determine the color of an object (assuming general color recognition under normal illumination rather than sophisticated color measurement), they recognize it quite adequately even if the ambient brightness changes. For example, red is recognized as red regardless of the brightness, and the same applies to pale colors. This means that humans use evaluation parameters that are separate from the light-quantity component and can judge them quite easily.

The perceptual color coordinate system is closely related to this ability. Colors are expressed either by using a physical color coordinate system (based on wavelength distribution or absorbance), in which RGB values, XYZ tristimulus values, or the like are used, or by using a perceptual color coordinate system, in which hue, brightness, saturation, etc. are used. The perceptual color coordinate system has four parameters: bright vs. dark (brightness), color shades (hues), vivid vs. subdued (saturation), and thick vs. thin (how deep or pale the color is).

Among these four parameters, which ones are not affected by changes in light quantity? Vivid vs. subdued (saturation) is affected by changes in light quantity because colors look more vivid as they become brighter. Bright vs. dark (brightness) is brightness itself and is, naturally, affected by changes in light quantity. The parameters that are not affected by light quantity are color shades (hues) and thick vs. thin. We developed our original color extraction method, the polar coordinate extraction method, by making effective use of these two parameters, which stay constant when the light quantity changes.
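The idea of extracting on two light-independent parameters can be illustrated with a rough sketch. KURABO's actual formulas are not given in this text, so everything below is an assumption: hue is modeled as the angle of the point (B-Y, R-Y) in polar coordinates, and thick vs. thin is modeled as the polar radius divided by Y. The BT.601 luma weights, the function names, and the threshold values are likewise hypothetical.

```python
import math


def polar_features(r, g, b):
    """Return (hue_degrees, depth) for an RGB pixel.

    Assumed model, not KURABO's published formula:
    hue   = angle of the point (B-Y, R-Y) in polar coordinates
    depth = polar radius divided by Y
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b   # BT.601 luma weights (assumption)
    ry, by = r - y, b - y
    hue = math.degrees(math.atan2(ry, by)) % 360.0
    depth = math.hypot(ry, by) / y if y > 0 else 0.0
    return hue, depth


def extract_polar(pixel, hue_center, hue_tol, depth_min):
    """True when the hue is within hue_tol degrees of hue_center
    and the depth is at least depth_min."""
    hue, depth = polar_features(*pixel)
    # Smallest angular distance, so ranges that cross 0/360 also work.
    diff = abs((hue - hue_center + 180.0) % 360.0 - 180.0)
    return diff <= hue_tol and depth >= depth_min


bright = polar_features(250, 220, 30)   # yellow under full light
dim = polar_features(125, 110, 15)      # same yellow at half the light quantity
print(bright, dim)
```

When R, G, and B are all scaled by the same factor, Y, R-Y, and B-Y scale by that factor too, so both the angle and the radius-to-Y ratio are unchanged. In this sketch, a call such as `extract_polar(pixel, hue_center=166, hue_tol=10, depth_min=0.5)` therefore gives the same result for the bright and the dim yellow pixel.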

Tips4.

Multilevel images and binary images

Digital images are usually made up of a number of individual pixels (dots of image data), and they can be classified as "Monochrome Images" or "Color Images". From a digital data point of view, however, they can also be described as "Binary Images" or "Multilevel Images".

Binary Image

"Binary Images" are digital images in which every dot or pixel takes one of only two values, "black" or "white".

Is a binary image the same as monochrome?

A monochrome image is sometimes called "black and white", but technically this is not completely correct. See the description of "Multilevel Image" below to understand the difference.

Multilevel Image

All images that are not binary images are multilevel images. Does that not quite answer the question?

First, let's talk about the image represented by an "8-bit format".

Usually, a binary image consists of pixels that are either black or white. White or black is represented in the image data as "1 (one) or 0 (zero)". In other words, this is a "1-bit format". An 8-bit value is eight 1-bit values in a row, which gives 2 to the power of 8 = 256 levels of representation.

If an 8-bit image represents color, it is said to have "256 levels of color". "Grayscale" is a range of shades of gray between black and white, so an 8-bit grayscale image is a multilevel image but not a color image.

In a computer, color is represented by combinations of R, G, and B (red, green, and blue), which are the primary colors. When each primary color is divided into 256 levels, 16,777,216 colors (256 to the power of 3, about 16.77 million) can be represented. This is commonly called "Full Color".

A multilevel image consumes far more memory or data storage than a binary image of the same subject and the same physical dimensions. (An 8-bit image holds 8 times as much data as a binary image, and a Full Color image holds 3 times as much data as an 8-bit image.)
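The level counts and storage ratios above can be verified with a few lines of arithmetic. The 1000 x 1000 pixel size and the helper names `levels` and `storage_bytes` are assumptions for illustration; the sizes are raw, uncompressed figures.

```python
def levels(bits):
    """Number of distinct values that the given number of bits can represent."""
    return 2 ** bits


def storage_bytes(width, height, bits_per_pixel):
    """Raw (uncompressed) storage for an image, in bytes."""
    return width * height * bits_per_pixel // 8


# Hypothetical image size, assumed for illustration only.
width, height = 1000, 1000

binary_size = storage_bytes(width, height, bits_per_pixel=1)       # 1-bit
eight_bit_size = storage_bytes(width, height, bits_per_pixel=8)    # 8-bit
full_color_size = storage_bytes(width, height, bits_per_pixel=24)  # 8 bits x RGB

print(levels(1), levels(8), levels(8) ** 3)
print(binary_size, eight_bit_size, full_color_size)
```

For this assumed size, the binary image needs 125,000 bytes, the 8-bit image 1,000,000 bytes, and the Full Color image 3,000,000 bytes, which matches the 8-times and 3-times ratios stated above.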